Network Traffic Generation Tool

Published:

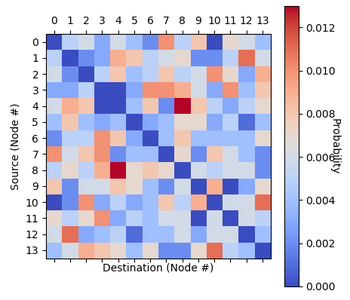

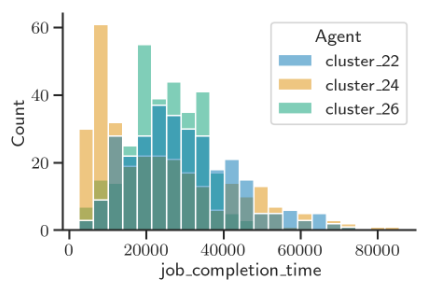

(Paper One) (Paper Two) (Paper Three) (GitHub) (Documentation)

Data related to communication networks is often sensitive and proprietary. Consequently, many academic networking papers are published without releasing the network traffic data used to obtain their results, and when datasets are released they are often too limited for data-hungry applications such as reinforcement learning. In an effort to aid reproducibility, some authors release characteristic distributions which broadly describe the underlying data. However, these distributions are often not described analytically and may not belong to the classic ‘named’ distributions (Gaussian, log-normal, Pareto, etc.). As a result, other researchers resort to unrealistically simple uniform traffic distributions, or to their own distributions, which are difficult to benchmark against universally. This project developed an open-access network traffic generation tool for (1) standardising the traffic patterns used to benchmark networking systems, and (2) enabling rapid and easy replication of literature distributions even in the absence of raw open-access data.
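To make the idea of replicating a literature distribution without raw data concrete, here is a minimal sketch of one generic approach: inverse-transform sampling from a CDF that has been read off a published plot. It is not the tool's own API. The function name `sample_from_cdf` and the flow-size points in `values` and `cdf` are hypothetical, chosen only for illustration.

```python
import bisect
import random


def sample_from_cdf(values, cdf, n, seed=None):
    """Draw n samples by inverse-transform sampling from a tabulated CDF.

    values: sorted x-coordinates (e.g. flow sizes) read off a published plot
    cdf:    non-decreasing cumulative probabilities for those values,
            ending at 1.0
    """
    if len(values) != len(cdf) or len(values) < 2:
        raise ValueError("values and cdf must have the same length (>= 2)")
    if cdf[-1] != 1.0:
        raise ValueError("cdf must end at 1.0")

    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        u = rng.random()  # uniform draw in [0, 1)
        # First tabulated point whose cumulative probability is >= u.
        i = bisect.bisect_left(cdf, u)
        if i == 0:
            samples.append(float(values[0]))
            continue
        # Linearly interpolate between the two neighbouring CDF points.
        frac = (u - cdf[i - 1]) / (cdf[i] - cdf[i - 1])
        samples.append(values[i - 1] + frac * (values[i] - values[i - 1]))
    return samples


# Hypothetical flow-size CDF (bytes), NOT taken from any real paper.
values = [100, 1_000, 10_000, 100_000]
cdf = [0.0, 0.5, 0.9, 1.0]

flow_sizes = sample_from_cdf(values, cdf, n=1000, seed=0)
```

Linear interpolation between tabulated points is the simplest choice here; a heavy-tailed distribution may be better served by interpolating in log space, which this sketch does not attempt.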